Nvidia NVLink Fusion opens Nvidia’s AI server platforms to third-party chips, a significant step for high-performance computing. NVLink Fusion lets custom CPUs and accelerators from other vendors connect directly to Nvidia’s NVLink fabric. That reshapes the AI server landscape and points toward more open, scalable, and efficient systems. From hyperscale data centers to enterprise AI infrastructure, this evolution in Nvidia’s interconnect strategy changes how chips from different vendors work together.

Introduction: The Age of Open AI Server Platforms

In an era where artificial intelligence (AI) drives much of business and innovation, hardware matters as much as the algorithms it runs. At the center of this shift stands Nvidia NVLink Fusion, an extension of Nvidia’s NVLink interconnect designed to open its AI server platforms to third-party chips. The initiative is more than a technological leap; it is a strategic pivot toward openness and a broader partner ecosystem.

As businesses move from proprietary stacks toward open, modular infrastructure, Nvidia’s latest move is a welcome change. With NVLink Fusion, hyperscalers and chip designers can build AI platforms in two ways. They can pair Nvidia GPUs with their own custom CPUs, or pair Nvidia CPUs with custom AI accelerators (ASICs). Either way, the chips are linked over the same NVLink fabric within Nvidia’s rack-scale systems.

Chapter 1: What Is Nvidia NVLink Fusion?

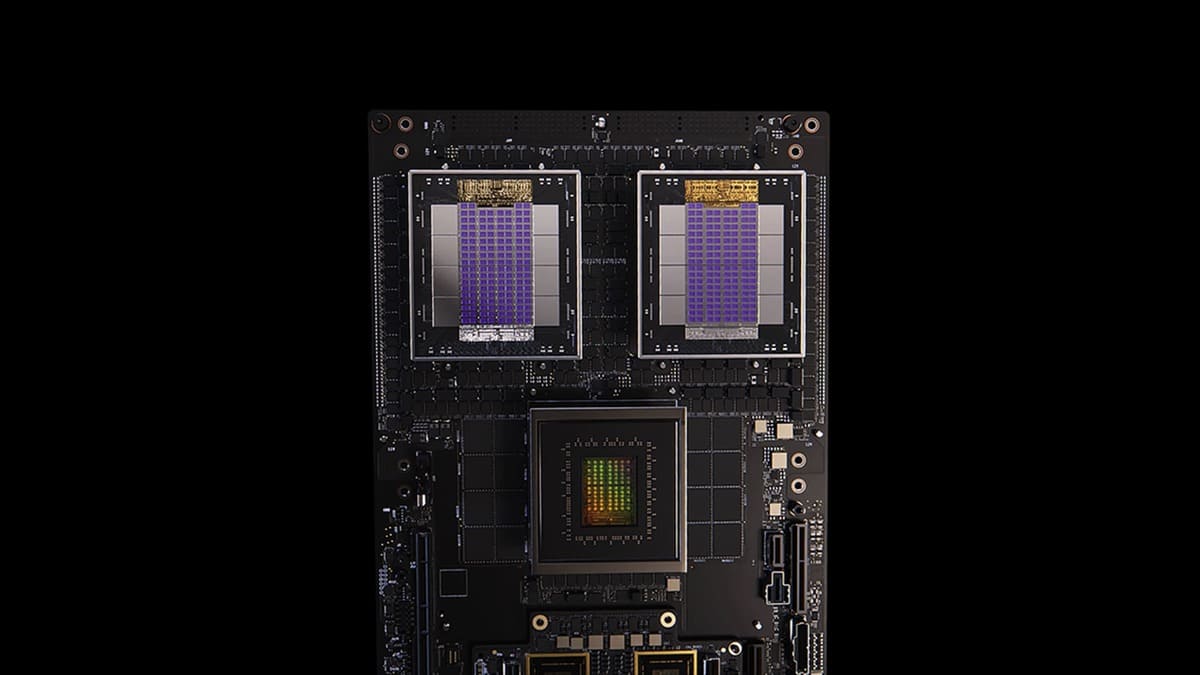

Nvidia NVLink Fusion makes Nvidia’s NVLink interconnect available to other chip designers, so that third-party CPUs and custom accelerators can communicate directly with Nvidia GPUs and CPUs. It extends the existing NVLink interface beyond Nvidia’s own silicon, loosening the boundaries of a previously closed hardware environment.

Traditionally, NVLink connected only Nvidia’s own chips: GPU to GPU, and, through NVLink-C2C, Grace CPU to GPU. This high-speed path enabled faster model training and higher data throughput. With NVLink Fusion, Nvidia opens the pathway to third-party chips, enabling heterogeneous AI server architectures.

Key Technical Advancements:

- Supports coherent memory sharing across diverse chips

- Lets custom, non-Nvidia CPUs connect to Nvidia GPUs

- Offers ultra-low latency, high-bandwidth data transfer

- Still requires Nvidia silicon: each system pairs the third-party chip with an Nvidia CPU or GPU

Chapter 2: Why NVLink Fusion Matters for AI Server Platforms

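AI workloads are growing in complexity. They now require a combination of GPUs, CPUs, memory, networking, and specialized AI accelerators. Traditional server designs are often bottlenecked by PCIe-based interconnects. These add latency and offer far less bandwidth than NVLink. They are also not fully cache-coherent, so software must copy data explicitly between devices and keep those copies in sync.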

Here’s where Nvidia NVLink Fusion transforms the AI server ecosystem:

1. Unifies Diverse Hardware

With NVLink Fusion, server architects can combine Nvidia GPUs with custom CPUs on the same platform. They can also combine Nvidia CPUs with custom AI accelerators, such as hyperscalers’ in-house silicon.

2. Reduces Bottlenecks

Direct point-to-point links let devices exchange data without staging it through host memory, cutting the delays typical of traditional architectures.

3. Optimizes Power and Efficiency

Instead of replicating data on each accelerator, coherent NVLink connections let devices work from a shared memory pool, improving efficiency and reducing the energy spent moving data.

4. Paves the Way for Modular AI Systems

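As organizations seek tailored AI stacks, modularity becomes key. NVLink Fusion lets chip and system designers choose the best-in-class component for each task. This flexibility arrives at the design stage rather than as plug-and-play cards, because partner chips must integrate NVLink Fusion in silicon.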
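Points 2 and 3 are easier to see with rough arithmetic. The sketch below makes two comparisons. First, it compares a transfer staged through host memory (two hops, modeled as strictly sequential) with a direct device-to-device transfer. Second, it compares the memory footprint of replicating a dataset on every accelerator with keeping one shared copy. All bandwidths and sizes are illustrative placeholders, not measured NVLink Fusion figures. Real staged copies are usually pipelined, which narrows the gap.

```python
"""Back-of-envelope model: staged vs. direct transfers, replicated vs. shared data.

Every bandwidth and size here is an illustrative assumption, not a measured
NVLink Fusion figure. Hops are modeled as strictly sequential (no pipelining).
"""


def staged_copy_seconds(size_gb: float, hop_bandwidths_gb_s: list[float]) -> float:
    """Time to move size_gb through each hop in turn, with no overlap between hops."""
    return sum(size_gb / bw for bw in hop_bandwidths_gb_s)


def direct_copy_seconds(size_gb: float, link_bandwidth_gb_s: float) -> float:
    """Time to move size_gb over a single direct device-to-device link."""
    return size_gb / link_bandwidth_gb_s


def replicated_footprint_gb(size_gb: float, num_devices: int) -> float:
    """Total memory used when every device keeps its own copy of the data."""
    return size_gb * num_devices


def shared_footprint_gb(size_gb: float) -> float:
    """Total memory used when all devices read one coherent, shared copy."""
    return size_gb


if __name__ == "__main__":
    data_gb = 64.0       # hypothetical working set
    pcie_gb_s = 128.0    # assumed one-way rate of a PCIe 6.0 x16 hop
    direct_gb_s = 900.0  # assumed one-way rate of a direct NVLink-class connection

    staged = staged_copy_seconds(data_gb, [pcie_gb_s, pcie_gb_s])  # device -> host -> device
    direct = direct_copy_seconds(data_gb, direct_gb_s)
    print(f"staged: {staged:.3f} s, direct: {direct:.3f} s")
    print(f"replicated on 4 devices: {replicated_footprint_gb(data_gb, 4):.0f} GB, "
          f"shared: {shared_footprint_gb(data_gb):.0f} GB")
```

With these placeholder numbers, the staged path takes 1.000 s and the direct path about 0.071 s. The ratio matters more than the absolute values.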

Chapter 3: The Technical Anatomy of NVLink Fusion

To truly understand NVLink Fusion’s significance, we must explore its technical foundation:

a. NVSwitch Fabric

The heart of the system is the NVSwitch, a fabric that routes data between all connected components. It acts as a highway junction for packets from various GPUs and third-party chips.
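A switched fabric matters because wiring every device directly to every other device scales quadratically. The sketch below is a simplified model, not Nvidia’s actual topology. It counts the links a full point-to-point mesh needs versus a design where each device connects only to a switch. Real NVLink Switch systems spread each GPU’s many links across multiple switch chips, which the hypothetical `uplinks_per_device` parameter only loosely approximates. The 72-device case mirrors the GPU count of a GB200 NVL72 rack.

```python
"""Link counts for a full point-to-point mesh vs. a switched fabric (simplified model)."""


def full_mesh_links(num_devices: int) -> int:
    """Links needed to connect every pair of devices directly: n(n-1)/2."""
    return num_devices * (num_devices - 1) // 2


def full_mesh_ports_per_device(num_devices: int) -> int:
    """In a full mesh, each device needs a port facing every other device."""
    return num_devices - 1


def switched_links(num_devices: int, uplinks_per_device: int = 1) -> int:
    """Links needed when every device connects only to the switch fabric."""
    return num_devices * uplinks_per_device


if __name__ == "__main__":
    for n in (8, 72):
        print(f"{n} devices: mesh={full_mesh_links(n)} links "
              f"({full_mesh_ports_per_device(n)} ports each), "
              f"switched={switched_links(n)} links")
```

The mesh needs 2,556 links for 72 devices, each with 71 ports, while the switched design grows linearly. That is why a switch fabric, rather than direct wiring, ties large NVLink domains together.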

b. Cache Coherency

Coherent links, such as the NVLink-C2C connection between Nvidia’s Grace CPU and its GPUs, ensure that all processors see the same up-to-date memory. This eliminates discrepancies between devices working on the same dataset.
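As a conceptual illustration only, the toy model below implements a textbook write-invalidate scheme. Before a device writes a memory line, every other cached copy is invalidated, so later reads fetch the fresh value instead of a stale one. This is not Nvidia’s coherency protocol, whose internals the article does not describe. The class, method, and device names are hypothetical.

```python
"""Toy write-invalidate coherence model (generic textbook scheme, not Nvidia's protocol)."""


class CoherentMemory:
    def __init__(self, device_names):
        self.memory = {}                                   # address -> value (backing store)
        self.caches = {name: {} for name in device_names}  # per-device cached lines

    def read(self, device, address):
        cache = self.caches[device]
        if address not in cache:               # miss: fetch the current value from memory
            cache[address] = self.memory.get(address, 0)
        return cache[address]

    def write(self, device, address, value):
        # Invalidate every other device's copy so no one can read a stale value.
        for name, cache in self.caches.items():
            if name != device:
                cache.pop(address, None)
        self.caches[device][address] = value
        self.memory[address] = value           # write-through keeps the backing store current


if __name__ == "__main__":
    mem = CoherentMemory(["gpu", "custom_asic"])
    mem.write("gpu", 0x10, 1)
    print(mem.read("custom_asic", 0x10))       # 1: fetched after the first write
    mem.write("gpu", 0x10, 2)                  # invalidates custom_asic's cached copy
    print(mem.read("custom_asic", 0x10))       # 2, not the stale 1
```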

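c. Relationship to CXL & UCIe

Compute Express Link (CXL) and Universal Chiplet Interconnect Express (UCIe) are open standards that address related problems. CXL covers coherent attachment of devices and memory; UCIe covers die-to-die links between chiplets. NVLink itself remains Nvidia’s own protocol. These standards can ease integration with non-Nvidia chips where a design supports them, but they do not by themselves guarantee compatibility.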

Chapter 4: Compatibility with Third-Party Chips

The defining feature of NVLink Fusion is its support for third-party chipsets, turning once-rigid systems into flexible AI ecosystems.

Examples of Compatible Chips:

- Custom CPUs: Qualcomm and Fujitsu have announced plans for CPUs that connect to Nvidia GPUs through NVLink Fusion

- Custom accelerators: silicon built with NVLink Fusion partners such as MediaTek, Marvell, and Alchip

- Custom ASICs: Designed for specific tasks like NLP or image recognition

The result? AI platforms that can leverage task-specific chips alongside general-purpose GPUs, delivering optimized performance across workloads.

Chapter 5: Use Cases Across AI Ecosystems

Nvidia NVLink Fusion is likely to affect many domains where AI servers are deployed. Let’s explore some of the most significant applications:

1. Hyperscale Data Centers

Hyperscalers such as Microsoft, Google, and AWS could mix Nvidia GPUs with their in-house chips to reduce cost and increase specialization.

2. Autonomous Vehicle Training

Combining GPUs with chips that specialize in sensor fusion or vision processing accelerates simulation environments.

3. Genomics and Drug Discovery

Heterogeneous hardware combinations improve performance in DNA sequencing and protein folding models.

4. Large Language Model Training

Training massive models like GPT-5 or PaLM 2 could benefit from cross-chip collaboration, improving both speed and efficiency.

Chapter 6: Challenges and Considerations

While the vision is promising, several challenges remain:

- Vendor Collaboration: Successful implementation requires cooperation between Nvidia and third-party vendors.

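- Software Stack Compatibility: Frameworks such as TensorFlow and PyTorch must schedule work across Nvidia and non-Nvidia chips. Because CUDA targets Nvidia GPUs, partner silicon also needs its own well-integrated software stack.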

- Thermal Management: Adding more chips to a server increases heat and power demands, which must be addressed.

Still, with Nvidia’s vast ecosystem and growing interest in open AI architectures, momentum appears to favor NVLink Fusion.

Chapter 7: Nvidia’s Strategic Shift Toward Openness

Nvidia has historically guarded its ecosystem, but with the advent of NVLink Fusion, the company signals a paradigm shift.

Why the change? The AI landscape is evolving:

- Companies demand more control over their hardware stacks

- Open-source communities push for standardization

- Nvidia wants to remain central even as competition in AI hardware intensifies

This shift reflects a strategic recognition that collaboration and modularity are the future.

Chapter 8: NVLink Fusion vs. PCIe and CXL

Let’s compare NVLink Fusion with other dominant interconnect technologies:

| Feature | NVLink Fusion | PCIe 6.0 | CXL 3.0 |
|---|---|---|---|
| Bandwidth (bidirectional) | 1.8 TB/s per Blackwell GPU (NVLink 5) | 256 GB/s per x16 link | 256 GB/s per x16 link (PCIe 6.0 PHY) |
| Coherency | Full | Limited | Full |
| Chip Agnostic | Partially (needs an Nvidia CPU or GPU) | Yes | Yes |
| Latency | Ultra Low | Moderate | Low |
| Proprietary | Yes (Nvidia) | No | No |

NVLink Fusion stands out on bandwidth, while PCIe and CXL remain the fully open, multi-vendor options. Its openness is narrower: third-party chips can join Nvidia’s fabric, but the fabric itself remains Nvidia’s.
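The bandwidth comparison mixes scopes: a single PCIe or CXL x16 link versus NVLink’s per-GPU aggregate. The sketch below derives each figure from its inputs so that difference stays explicit. It assumes PCIe 6.0’s raw signaling rate of 64 GT/s per lane, which CXL 3.0 reuses. For NVLink 5 on Blackwell GPUs, it assumes 18 links at 100 GB/s each. Hopper’s NVLink 4 used 18 links at 50 GB/s, which gives 900 GB/s. Encoding and protocol overheads are ignored, so real throughput is lower.

```python
"""Derive the interconnect bandwidth figures used in the comparison table.

Raw rates only: FLIT, encoding, and protocol overheads are ignored.
"""


def pcie_gen6_gb_per_s(lanes: int = 16, bidirectional: bool = True) -> float:
    """PCIe 6.0 (and CXL 3.x, which reuses its PHY) at 64 GT/s per lane."""
    per_lane_one_way = 64 / 8          # 64 Gb/s per lane -> 8 GB/s per direction
    one_way = per_lane_one_way * lanes
    return one_way * 2 if bidirectional else one_way


def nvlink_gpu_gb_per_s(links: int = 18, per_link_gb_per_s: float = 100.0) -> float:
    """Aggregate NVLink bandwidth per GPU (bidirectional); defaults assume NVLink 5."""
    return links * per_link_gb_per_s


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized time to move size_gb in one direction at the given bandwidth."""
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    pcie = pcie_gen6_gb_per_s()        # 256.0 GB/s for x16, both directions
    nvlink = nvlink_gpu_gb_per_s()     # 1800.0 GB/s per GPU, both directions
    print(f"PCIe 6.0 / CXL 3.0 x16: {pcie:.0f} GB/s, NVLink 5 per GPU: {nvlink:.0f} GB/s")
    # A one-way copy of a hypothetical 100 GB checkpoint uses half of each bidirectional figure.
    print(f"100 GB one-way: PCIe x16 {transfer_seconds(100, pcie / 2):.3f} s, "
          f"NVLink 5 {transfer_seconds(100, nvlink / 2):.3f} s")
```

Comparing a per-GPU aggregate with one x16 link flatters NVLink somewhat, because a host can expose several PCIe or CXL links. Keeping the scope in each function’s name makes that caveat visible.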

Chapter 9: The Future of Modular AI Hardware

As NVLink Fusion gains adoption, we’ll see a wave of modular AI servers, where companies can:

- Add or remove chips based on workload

- Mix vendors to optimize for performance and budget

- Future-proof hardware investments

If upcoming Nvidia GPU generations all support NVLink Fusion, it could set a new standard for interoperability in AI computing.

Chapter 10: How to Build an NVLink Fusion AI Server Platform

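Building a platform around NVLink Fusion is, for now, work for hyperscalers, chip designers, and system makers collaborating with Nvidia and its partners, not an assembly of off-the-shelf cards. The main ingredients are:

- Compatible Nvidia GPUs (Hopper, Blackwell, etc.)

- NVLink Switch fabric

- Third-party CPUs or AI accelerators that integrate NVLink Fusion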

- UCIe/CXL backplane or bridge chips

- Power-efficient chassis with advanced cooling

Ensure you use a server platform that supports dynamic resource scheduling, which allows different chips to be assigned tasks based on AI workload profiles.
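What “dynamic resource scheduling” means in practice depends on the platform’s orchestration software, which the article does not specify. As a hypothetical illustration, the sketch below implements a simple greedy earliest-finish-time scheduler. Each task goes to whichever device would complete it soonest, given an assumed per-device throughput for each workload type. The device names, workload kinds, and throughput numbers are invented for the example.

```python
"""Greedy earliest-finish-time task placement across heterogeneous devices.

Device names, workload kinds, and throughputs are hypothetical examples.
"""
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    throughput: dict[str, float]      # work units per second, by workload kind
    busy_until: float = 0.0           # time at which the device becomes free
    assigned: list[str] = field(default_factory=list)


def schedule(tasks: list[tuple[str, str, float]], devices: list[Device]) -> dict[str, str]:
    """Assign each (task_id, kind, work) to the device that would finish it earliest."""
    placement = {}
    for task_id, kind, work in tasks:
        capable = [d for d in devices if d.throughput.get(kind, 0) > 0]
        if not capable:
            raise ValueError(f"no device can run workload kind {kind!r}")
        best = min(capable, key=lambda d: d.busy_until + work / d.throughput[kind])
        best.busy_until += work / best.throughput[kind]
        best.assigned.append(task_id)
        placement[task_id] = best.name
    return placement


if __name__ == "__main__":
    devices = [
        Device("gpu0", {"training": 10.0, "inference": 8.0}),
        Device("asic0", {"inference": 20.0}),   # hypothetical inference-only accelerator
    ]
    tasks = [("t1", "training", 50.0), ("t2", "inference", 40.0), ("t3", "inference", 40.0)]
    print(schedule(tasks, devices))
```

Here the training task can only run on the GPU. Both inference tasks land on the hypothetical ASIC, because it finishes them sooner even after queueing. A production scheduler would also weigh data locality, power, and preemption.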

Chapter 11: Industry Reactions and Analyst Predictions

Industry experts see NVLink Fusion as a pivotal move toward open AI architectures.

“It’s the kind of open infrastructure that hyperscalers have been dreaming of.” — Gartner Analyst

“NVLink Fusion will push Nvidia even further ahead by enabling flexibility without sacrificing performance.” — IDC Report

Chapter 12: Final Thoughts

Nvidia NVLink Fusion isn’t just a product launch; it’s a statement about the future of AI hardware. By embracing openness and interoperability on its own terms, Nvidia is expanding its influence while opening its AI infrastructure to a wider range of chips.

As AI use cases diversify and workloads evolve, this move may prove to be one of the most influential in shaping next-gen AI server platforms.

✅ Conclusion

If you’re building or upgrading AI server platforms in 2025 and beyond, Nvidia NVLink Fusion should be on your radar. You might be a data center architect, a CTO, or an AI researcher. In each case, pairing best-in-class CPUs and accelerators from other vendors with Nvidia’s NVLink fabric could unlock new levels of performance, scalability, and flexibility.